It is a truth universally acknowledged among bioinformaticians that 90% of the stuff that bioinformaticians do is to convert files from one format to another. One tool outputs format A, and the next tool you want to use needs format B as input. As a consequence, there is a slew of different file format conversion tools out there, and a google search done at the time of writing returned about 2.5 million results on the search sentence “bioinformatics file format conversion”. A personal favorite of mine for format conversions is the “seqret” tool which is found in the EMBOSS package. Yes, it has been around the block for a while, but it will do most of the things that are needed. That tool, together with some unix commands, such as sed, awk and grep can usually get you pretty far along where you need to go.

However, every once in a while a format and a need for a specific output comes along, and it just turns out to be easier to throw some code at it, rather than to make bash hacks (Yes, I know many count bash hacks as code, and sometimes it can be. However, for most people it just isn’t). This was the situation I found myself recently. I am working with some folks to look at virulence genes in a specific set of genomes. Now, there are a lot of ways of doing that, but in 98% of all cases, this comes down to good old BLAST. The main way of doing this is to take the set of genes you are looking for and blast them against your genomes. Then, apply some filters on said blast results, and there you have it. Most specific gene finders (which is what I call MLST finders, resistance gene finders, virulence finders, and whatnot-finders) work in this way. However, some of them are easier to install and use. And in my case, more importantly, for some of them it is easier to understand how to create your own set of genes to look for. In this case, I did not do that, but that is likely to happen later. Thus, tools that allow me to do that without having to jump through too many hoops are preferred. In this case, enter abricate, a nice little perl tool written by Torsten Seeman fits the bill nicely. Yes, it is perl, which can be an install nightmare, but there is sensibly a conda package that allows you to skirt those issues. Thus I proceeded to run my analyses. Lo and behold virulence genes were found, and there was much rejoicing.

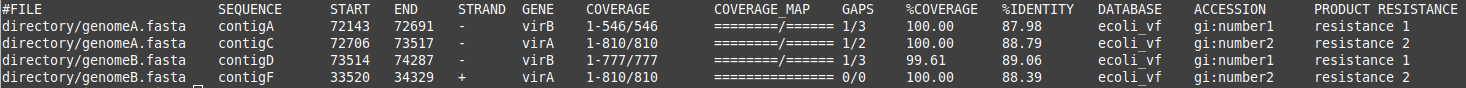

Then came the next question: could I get the gene sequences of the genes found by abricate out of the genomes somehow? My collaborators suspect some foul play might be going on here. Now, this is what the format of abricate output files look like:

As you can see, this is a normal tabular output format, not too different from what you see in other situations. In order to extract the gene sequences, I would need to grab the genome sequence, find the contig the gene we are thinking of is in, then cut it out of the contig in question using the start and stop positions, and stuff it into a fasta file. Now, cunning readers might look at the format and think “bedtools“or “seqkt”, and they would not be wrong. To use those, you would need to have one file per genome that contained the results of the search. Then you would need to use something like awk to grab the contig column, as well as the start and stop columns and the strandedness of the sequence. However, seqtk can’t use the strandedness, so there goes that option. Bedtools can use it, so that could work. But, it looked like doing that would be a lot of work. Also, in all likelihood, the people I collaborate with would want to extract several different genes. Thus I would need something that would be quick and easy to do whenever the situation should arise. Also, coding is a lot more fun than putting together less-than-reproducible bash hacks. Thus my tiny little python script called “abricate-extract.py” was born. Now, the code is not pretty, and it is not very well documented, but it does work. It showcases the fact that another favorite of mine, biopython, is very useful and can save you a lot of time. Also, sometimes classes just make sense. The script takes in an abricate output file and the gene you are looking to extract, and extracts that from all of the genomes the analyses found a match on. This will then be put into a fasta output file for all to enjoy.

There you have it, hope you found this useful somehow!